“Digital Signal Processing” No. 3-2016

Power line detection on images using multi-agent approach

Alpatov B.A., e-mail: aitu@rsreu.ru

Babayan P.V., e-mail: aitu@rsreu.ru

Shubin N.J., e-mail: aitu@rsreu.ru

Ryazan State Radio Engineering University (RSREU), Ryazan

Keywords: power line detection, wire detection, Radon transform, multi-agent system, pattern recognition.

Abstract

This paper addresses the problem of detecting power lines in images. An algorithm based on the Radon transform and a multi-agent approach is proposed, and the results of its experimental evaluation are presented.

Unmanned aerial vehicles (UAVs) are finding a growing range of applications in various areas of human activity. The absence of an onboard crew has to be compensated for by a high degree of control automation or by remote control. However, modern onboard control systems remain imperfect compared with a human pilot, and even a remote operator is not always able to assess the situation correctly and quickly and to decide on a maneuver. The probability of a UAV colliding with landscape elements, structures or other airborne objects severely limits the use of UAVs in both civil and military fields. Power lines in the urban environment are a particular danger for UAVs. Detecting wires and estimating their parameters in order to inform either the operator or the autopilot of their presence is therefore a pressing task. The line detection method proposed in this paper can also be useful in other image processing applications, for example in aerial photography, mapping and medicine.
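As a rough illustration of how a Radon-type transform exposes straight lines such as wires, the sketch below accumulates pixel intensities along lines rho = x*cos(theta) + y*sin(theta) and reports the strongest one. It is a nearest-bin approximation written for this summary, not the authors' algorithm; the multi-agent stage is not modeled, and the names `radon_accumulate` and `strongest_line` are hypothetical.

```python
import math

def radon_accumulate(image, n_angles=180):
    """Nearest-bin discrete Radon transform of a 2-D list of intensities.

    Returns (accumulator, rho_offset); accumulator[a][rho + rho_offset] holds
    the sum of intensities along the line with angle index a and offset rho.
    """
    h, w = len(image), len(image[0])
    rho_max = int(math.ceil(math.hypot(h, w)))
    trig = [(math.cos(math.pi * a / n_angles), math.sin(math.pi * a / n_angles))
            for a in range(n_angles)]
    acc = [[0.0] * (2 * rho_max + 1) for _ in range(n_angles)]
    for y in range(h):
        for x in range(w):
            v = image[y][x]
            if v == 0:
                continue  # zero pixels contribute nothing to any line sum
            for a, (c, s) in enumerate(trig):
                rho = int(round(x * c + y * s))
                acc[a][rho + rho_max] += v
    return acc, rho_max

def strongest_line(image, n_angles=180):
    """Return (angle in degrees, rho, accumulated intensity) of the peak."""
    acc, offset = radon_accumulate(image, n_angles)
    value, a, r = max((acc[a][r], a, r)
                      for a in range(n_angles)
                      for r in range(len(acc[0])))
    return 180.0 * a / n_angles, r - offset, value

# Synthetic test image: one horizontal "wire" at y = 5 (illustrative data).
image = [[0] * 16 for _ in range(16)]
for x in range(16):
    image[5][x] = 1
angle_deg, rho, strength = strongest_line(image)
```

Because of the coarse binning, neighboring angles can tie with the true one on short lines, so the reported angle should be read as accurate to about one angular step.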

The complex of algorithms for detecting and tracking moving objects for on board technical vision system

A.B. Feldman, e-mail: aitu@rsreu.ru

D.Y. Erokhin, e-mail: erokhin.d.y@Gmail.com

Ryazan State Radio Engineering University (RSREU), Ryazan

Keywords: object detection, multiple object tracking, geometric transformations, technical vision system, Fourier transform.

Abstract

In this paper we propose a set of algorithms for detecting and tracking moving objects observed from an aircraft. The set includes three main elements: an algorithm for estimating and compensating geometric transformations of images, an algorithm for detecting moving objects, and an algorithm for tracking the detected objects and predicting their positions.

To estimate and compensate geometric transformations, we use an algorithm based on phase correlation, which is applied sequentially to determine the rotation and then the shift of the current frame. This algorithm provides subpixel precision at an acceptable processing speed.
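A minimal sketch of the phase correlation idea follows, assuming a pure circular translation between two small frames. It recovers only an integer shift with a naive DFT. The rotation step and the subpixel refinement mentioned in the abstract are not modeled, and the function names are hypothetical.

```python
import cmath
import random

def dft2(img, inverse=False):
    """Naive 2-D DFT, O(N^4); adequate only for tiny illustrative images."""
    h, w = len(img), len(img[0])
    sign = 1 if inverse else -1
    out = [[0j] * w for _ in range(h)]
    for u in range(h):
        for v in range(w):
            s = 0j
            for y in range(h):
                for x in range(w):
                    s += img[y][x] * cmath.exp(
                        sign * 2j * cmath.pi * (u * y / h + v * x / w))
            out[u][v] = s / (h * w) if inverse else s
    return out

def phase_correlation_shift(ref, cur):
    """Return (dy, dx) such that cur equals ref circularly shifted by (dy, dx)."""
    h, w = len(ref), len(ref[0])
    f_ref, f_cur = dft2(ref), dft2(cur)
    cross = [[0j] * w for _ in range(h)]
    for u in range(h):
        for v in range(w):
            c = f_cur[u][v] * f_ref[u][v].conjugate()
            mag = abs(c)
            # Keep only the phase; skip components with no energy.
            cross[u][v] = c / mag if mag > 1e-12 else 0j
    corr = dft2(cross, inverse=True)
    _, dy, dx = max((corr[y][x].real, y, x)
                    for y in range(h) for x in range(w))
    # Map peak coordinates to signed shifts.
    if dy > h // 2:
        dy -= h
    if dx > w // 2:
        dx -= w
    return dy, dx

# Illustrative data: a random 8x8 frame and a copy shifted by (2, 3).
rng = random.Random(0)
ref = [[rng.random() for _ in range(8)] for _ in range(8)]
cur = [[ref[(y - 2) % 8][(x - 3) % 8] for x in range(8)] for y in range(8)]
shift = phase_correlation_shift(ref, cur)
```

A practical implementation would use an FFT library and interpolate around the correlation peak to reach subpixel accuracy.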

After the geometric transformations have been estimated and compensated, we detect moving objects. In the first step, we estimate the background and the intensity of the noise component of the observed image. The background and the standard deviation of the image noise are estimated by means of exponential temporal filtering. In the second step, we subtract the background estimate from the current frame. Using the estimated noise intensity and the result of the subtraction, we decide whether an object is present at each pixel. The result of this step is a list of segments whose size matches the expected size of moving objects.
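The two detection steps can be sketched as a per-pixel running average with a noise-scaled threshold. The smoothing factor `alpha`, the threshold multiplier `k`, and the choice to update the model only from background pixels are assumptions made for this illustration; segment extraction and size filtering are omitted.

```python
def update_and_detect(frame, background, variance, alpha=0.05, k=3.0):
    """One step of exponential background/noise estimation and thresholding.

    frame, background and variance are 2-D lists of equal shape.
    background and variance are updated in place; a 0/1 mask is returned.
    """
    mask = []
    for y, row in enumerate(frame):
        mask_row = []
        for x, value in enumerate(row):
            diff = value - background[y][x]
            # Pixel is flagged when |diff| exceeds k standard deviations.
            is_object = diff * diff > (k * k) * variance[y][x]
            mask_row.append(1 if is_object else 0)
            if not is_object:
                # Assumption: only background pixels refresh the model.
                background[y][x] += alpha * diff
                variance[y][x] = ((1 - alpha) * variance[y][x]
                                  + alpha * diff * diff)
        mask.append(mask_row)
    return mask

# Illustrative data: flat background of 10 with one bright moving pixel.
background = [[10.0] * 4 for _ in range(3)]
variance = [[1.0] * 4 for _ in range(3)]
frame = [[10.5] * 4 for _ in range(3)]
frame[1][2] = 50.0
mask = update_and_detect(frame, background, variance)
```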

At the final stage, correspondence is established between the segments extracted from the current frame and the tracked objects. This algorithm allows us to track the merging and splitting of segments. We also use a Kalman filter to predict the position of a moving object, which makes it possible to keep tracking an object through occlusion.
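To illustrate how a Kalman filter can carry a track through occlusion, here is a minimal one-dimensional constant-velocity filter. The motion model, the noise parameters `q` and `r`, and the class name are assumptions for this sketch; the abstract does not specify the state model the authors use.

```python
class ConstantVelocityKalman:
    """1-D Kalman filter with state (position, velocity) and position measurements."""

    def __init__(self, pos, vel=0.0, p_var=100.0, q=0.01, r=1.0):
        self.p, self.v = pos, vel
        self.P = [[p_var, 0.0], [0.0, p_var]]  # state covariance
        self.q, self.r = q, r                  # process / measurement noise

    def predict(self, dt=1.0):
        """Propagate state and covariance: P <- F P F^T + q*I."""
        P = self.P
        self.p += dt * self.v
        a = P[0][0] + dt * P[1][0]
        b = P[0][1] + dt * P[1][1]
        self.P = [[a + dt * b + self.q, b],
                  [P[1][0] + dt * P[1][1], P[1][1] + self.q]]
        return self.p

    def update(self, z):
        """Correct the state with a position measurement z."""
        P = self.P
        s = P[0][0] + self.r
        k0, k1 = P[0][0] / s, P[1][0] / s  # Kalman gain
        residual = z - self.p
        self.p += k0 * residual
        self.v += k1 * residual
        self.P = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
                  [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]

# Illustrative track: an object moving 2 pixels per frame for 10 frames,
# then hidden for 3 frames (prediction only, no update).
kf = ConstantVelocityKalman(pos=0.0)
for z in [2.0 * t for t in range(10)]:
    kf.predict()
    kf.update(z)
predicted = [kf.predict() for _ in range(3)]
```

During the occluded frames only `predict` is called, so the track continues along the estimated velocity until a matching segment reappears.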

A 640x480-pixel frame is processed on a computer with an Intel Core 2 Duo T7100 in real time at a frame rate of 25 Hz. Experimental results show the high efficiency and low computational complexity of the algorithms and confirm that they can run in real time on an onboard technical vision system.

The comparison of three performance indicators for the object position estimation algorithm

S.E. Korepanov, e-mail: aitu@rsreu.ru

S.A. Smirnov, e-mail: aitu@rsreu.ru

V.V. Strotov, e-mail: aitu@rsreu.ru

Ryazan State Radio Engineering University (RSREU), Ryazan

Keywords: object coordinate estimation algorithm, multiple template matching, SAD criterion, vision systems, performance indicators.

Abstract

This work deals with an object tracking algorithm based on multiple reference areas. Here, object tracking is understood as estimating the coordinates of the object center and the object size in every frame of an image sequence. The proposed algorithm is used as part of a complex tracking algorithm designed for objects whose size varies greatly. This approach combines several object tracking algorithms with a set of rules for switching between them. In general, the object size in this case can range from a single pixel to much larger than the sensed image.

The regular switching rule for the multiple reference area based algorithm takes into account the actual image size. In some cases, however, this algorithm may produce unacceptable errors after switching, because the selection of the required number of suitable reference areas on the object cannot be guaranteed at the moment of switching. The existing switching rules do not take into account object properties such as brightness, contrast and spatial inhomogeneity.

The goal of this work is to compare several performance indicators for the proposed algorithm. The first indicator is based on the temporal variability of the reference areas. The second is based on analysis of the difference criterion function in the nearest neighborhood of its global minimum. The third is based on image gradient analysis. The comparison criteria are the switching errors and the computational complexity.

The results of experimental examination of these indicators are presented. They show that indicator #3, based on image gradient analysis, has the best precision and the lowest computational complexity. It can be used to estimate the efficiency of the multiple reference area based algorithm.
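For orientation, the sketch below shows exhaustive template matching with the SAD (sum of absolute differences) criterion named in the keywords, plus a simple gradient measure of a reference area. The function `gradient_energy` is a hypothetical stand-in for a gradient-based indicator; the abstract does not give the authors' exact formula.

```python
def sad(image, template, top, left):
    """Sum of absolute differences between template and an image window."""
    return sum(abs(image[top + i][left + j] - template[i][j])
               for i in range(len(template))
               for j in range(len(template[0])))

def match_sad(image, template):
    """Exhaustive search; returns (best SAD value, top, left)."""
    th, tw = len(template), len(template[0])
    return min((sad(image, template, t, l), t, l)
               for t in range(len(image) - th + 1)
               for l in range(len(image[0]) - tw + 1))

def gradient_energy(patch):
    """Mean absolute forward difference over a patch (assumed indicator).

    A flat patch gives 0, which suggests a poor reference area.
    """
    h, w = len(patch), len(patch[0])
    total, count = 0, 0
    for i in range(h):
        for j in range(w):
            if j + 1 < w:
                total += abs(patch[i][j + 1] - patch[i][j])
                count += 1
            if i + 1 < h:
                total += abs(patch[i + 1][j] - patch[i][j])
                count += 1
    return total / count if count else 0.0

# Illustrative data: every pixel value is distinct, so the match is unique.
image = [[10 * y + x for x in range(6)] for y in range(6)]
template = [row[3:5] for row in image[2:4]]
best = match_sad(image, template)
flat_score = gradient_energy([[5, 5], [5, 5]])
textured_score = gradient_energy(template)
```

The intuition is that a reference area with low gradient energy yields a shallow SAD minimum, so its position estimate is less reliable.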

Video matting with aid of reconstructed background

Erofeev M.V., e-mail: merofeev@graphics.cs.msu.ru

Vatolin D.S., e-mail: dmitriy@graphics.cs.msu.ru

Keywords: matting, video processing, background reconstruction, background inpainting, matting Laplacian.

Abstract

Formally, matting is the problem of decomposing an image into a foreground image, a background image and a foreground transparency map. This problem is extremely important for video and image editing tasks such as background replacement, applying a transform to the background or foreground only, and stereoscopic image generation. In this paper we propose a video matting method based on the Learning Based Matting method. We describe a modification of the base method that enables us to use a reconstructed background sequence as an additional input. We also propose an iterative method for spatio-temporal smoothing of the transparency map. Finally, we show that the proposed approach outperforms 11 image and video matting methods in an objective comparison.
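The decomposition follows the standard compositing equation I = alpha*F + (1 - alpha)*B for each pixel. The sketch below shows why a known background helps: once B is fixed, and assuming for illustration that F is also known, alpha has a closed-form least-squares solution over the color channels. This is not the Learning Based Matting modification described in the abstract, only a per-pixel illustration with hypothetical function names.

```python
def composite(alpha, fg, bg):
    """Compositing equation applied per color channel."""
    return tuple(alpha * f + (1 - alpha) * b for f, b in zip(fg, bg))

def estimate_alpha(pixel, fg, bg):
    """Least-squares alpha for known foreground and background colors.

    Minimizes |pixel - (alpha*fg + (1 - alpha)*bg)|^2, clamped to [0, 1].
    """
    num = sum((i - b) * (f - b) for i, f, b in zip(pixel, fg, bg))
    den = sum((f - b) ** 2 for f, b in zip(fg, bg))
    if den == 0:
        return 0.0  # fg equals bg: alpha cannot be identified
    return min(1.0, max(0.0, num / den))

# Illustrative RGB colors.
fg = (200.0, 50.0, 50.0)
bg = (20.0, 120.0, 220.0)
pixel = composite(0.25, fg, bg)
alpha = estimate_alpha(pixel, fg, bg)
```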

Objective quality assessment methodology for video background reconstruction

Bokov A.A., e-mail: abokov@graphics.cs.msu.ru

Vatolin D.S., e-mail: dmitriy@graphics.cs.msu.ru

Keywords: background reconstruction, video processing, objective quality assessment, quality benchmark.

Abstract

In its general form, video background reconstruction is usually defined as the task of plausibly reconstructing a video region marked by an input mask. Object removal is a typical example of background reconstruction. Several new methods have been introduced over the past few years; however, no standard benchmark has yet been established. In this work we propose an objective background reconstruction quality benchmark consisting of several metrics, which we demonstrate to correlate better with perceptual quality than prior approaches. Perceptual background reconstruction quality is evaluated quantitatively from pairwise comparisons of background reconstruction methods performed by over 300 human subjects.
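Pairwise votes of this kind are commonly converted into per-method quality scores. The sketch below uses Thurstone Case V scaling as one possible model. The abstract does not say which model the authors apply, so the model choice, the add-one smoothing and the vote counts are all assumptions.

```python
from statistics import NormalDist

def thurstone_case_v(wins):
    """Scale scores from a pairwise preference matrix.

    wins[i][j] is the number of times method i was preferred over method j.
    Each score is the mean z-value of the smoothed preference probabilities.
    """
    n = len(wins)
    inv_cdf = NormalDist().inv_cdf
    scores = []
    for i in range(n):
        z_sum = 0.0
        for j in range(n):
            if i == j:
                continue
            total = wins[i][j] + wins[j][i]
            # Add-one smoothing keeps p strictly inside (0, 1) (assumption).
            p = (wins[i][j] + 1) / (total + 2)
            z_sum += inv_cdf(p)
        scores.append(z_sum / (n - 1))
    return scores

# Hypothetical vote counts for three reconstruction methods.
wins = [[0, 8, 9],
        [2, 0, 7],
        [1, 3, 0]]
scores = thurstone_case_v(wins)
```

Scores obtained this way can then be correlated with each objective metric to check how well the metric tracks perceived quality.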

Algorithm of objects thermal image formation in case of radiometric observation

V. K. Klochko, e-mail: klochkovk@mail.ru

O. N. Makarova

S. M. Gudkov

A. A. Koshelev

The Ryazan State Radio Engineering University (RSREU), Russia, Ryazan

Keywords: radiometer, radiometric image, optical pattern, resolution capability.

Abstract

Unlike thermal imagers, which receive radiation from the cover of the land surface, radiometers can register the radio-thermal radiation of objects hidden under such a cover and are less affected by atmospheric conditions. However, the spatial resolution of radiometric systems is considerably lower than that of thermal imagers and optical systems. The purpose of this work is to develop an algorithm for forming thermal images of objects in the millimeter-wave range with the spatial resolution of an optical image.

This is achieved by joint processing of radiometric and optical images. The optical image is first scaled to match the radiometric image. The optical image is then segmented into subareas that are uniform in temperature. The resulting segments are transferred to the radiometric image matrix, and each segment is assigned its average temperature. Temperature is then mapped to color levels.

The result is a color image of the objects that has the spatial resolution of the optical image and carries information on the radio-brightness temperature of the objects in the millimeter-wave range. Results of full-scale tests of the algorithm are shown. The outlines of the objects and their color-coded temperature are clearly visible in the images. The proposed algorithm can be used in existing radiometric object tracking systems.
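The segment-averaging step can be sketched as follows, assuming that the optical image has already been segmented into a label map and that its resolution is an integer multiple `scale` of the radiometric one. Both assumptions, as well as the function name, are introduced here for illustration; the color mapping is omitted.

```python
def segment_temperature_map(labels, radiometric, scale):
    """Assign each optical-resolution segment its mean radiometric temperature.

    labels: 2-D list of segment ids at optical resolution.
    radiometric: 2-D list of temperatures at the coarser resolution.
    scale: integer ratio of optical to radiometric resolution (assumed).
    """
    sums, counts = {}, {}
    for y, row in enumerate(labels):
        for x, seg in enumerate(row):
            # Each optical pixel reads the radiometric cell that covers it.
            t = radiometric[y // scale][x // scale]
            sums[seg] = sums.get(seg, 0.0) + t
            counts[seg] = counts.get(seg, 0) + 1
    means = {seg: sums[seg] / counts[seg] for seg in sums}
    return [[means[seg] for seg in row] for row in labels]

# Illustrative data: two segments on a 4x4 optical grid, 2x2 radiometric grid.
labels = [[0, 0, 0, 1] for _ in range(4)]
radiometric = [[300.0, 310.0],
               [300.0, 310.0]]
thermal = segment_temperature_map(labels, radiometric, scale=2)
```

The output keeps the sharp segment outlines of the optical image while each segment carries a single temperature value from the radiometer.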

Bistatic SAR baseline estimation by interferogram analysis

N.A. Egoshkin, e-mail: foton@rsreu.ru

V.A. Ushenkin, e-mail: foton@rsreu.ru

The Ryazan State Radio Engineering University (RSREU), Russia, Ryazan

Keywords: interferogram, SAR imaging, digital elevation model, phase unwrapping, interferometric baseline.

Abstract

The problem of estimating the baseline of a spaceborne InSAR system by interferogram analysis is considered. Two main baseline-dependent parameters that need to be estimated are identified: the multiplicative factor of the phase dependence on relief height and the multiplicative factor of the flat-relief phase. High-precision algorithms for estimating these parameters that do not require interferogram phase unwrapping are proposed.
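One generic way to estimate a multiplicative phase factor without unwrapping is to work with complex exponentials: for each candidate factor, remove the modeled phase and measure how coherently the residuals add up. The sketch below applies this to the height factor, assuming reference heights are available (for example from a coarse DEM). It is an assumed illustration of the principle, not the algorithm proposed by the authors.

```python
import cmath
import math

def wrap(phase):
    """Wrap a phase value into (-pi, pi]."""
    return math.atan2(math.sin(phase), math.cos(phase))

def estimate_height_factor(wrapped_phase, heights, candidates):
    """Pick the factor k maximizing |mean(exp(j*(phase - k*height)))|.

    Works directly on wrapped phase; a constant phase offset does not
    affect the result because only the magnitude of the sum is used.
    """
    def coherence(k):
        total = sum(cmath.exp(1j * (p - k * h))
                    for p, h in zip(wrapped_phase, heights))
        return abs(total) / len(heights)
    return max(candidates, key=coherence)

# Illustrative data: true factor 0.2 rad per meter, offset 0.3 rad.
heights = [0, 13, 27, 41, 58, 76, 95, 120, 151, 180]
wrapped_phase = [wrap(0.2 * h + 0.3) for h in heights]
candidates = [i * 0.005 for i in range(101)]
k_est = estimate_height_factor(wrapped_phase, heights, candidates)
```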

Satellite images blurring and defocus correction in the case of geometric distortion

N.A. Egoshkin, e-mail: foton@rsreu.ru

The Ryazan State Radio Engineering University (RSREU), Russia, Ryazan

Keywords: image improvement, defocus correction, blurring correction, remote sensing, point spread function, deconvolution.

Abstract

The problem of correcting speed blurring and defocus in satellite images from modern scanning sensors is considered. It is shown that the geometric distortion of the images needs to be taken into account. Speed blurring correction is based on an analytical description of the signal acquisition process. Defocus correction is based on evaluating the point spread function using point objects and multi-channel images of the same area. Wavelet transform methods are used to improve the correction quality and to simplify the configuration of the algorithms.
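To make the deconvolution step concrete, the sketch below restores a one-dimensional signal blurred by a known point spread function using a classical Wiener filter. The Wiener filter is a stand-in chosen for brevity: the abstract describes wavelet-based methods, and the uniform three-sample smear, the regularization constant `k` and the function names are assumptions.

```python
import cmath

def dft(x, inverse=False):
    """Naive 1-D DFT; adequate for short illustrative signals."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [sum(x[t] * cmath.exp(sign * 2j * cmath.pi * f * t / n)
               for t in range(n))
           for f in range(n)]
    return [v / n for v in out] if inverse else out

def circular_blur(signal, psf):
    """Circular convolution of the signal with the point spread function."""
    n = len(signal)
    return [sum(psf[j] * signal[(i - j) % n] for j in range(len(psf)))
            for i in range(n)]

def wiener_deconvolve(blurred, psf, k=1e-6):
    """Wiener restoration: F = G * conj(H) / (|H|^2 + k)."""
    n = len(blurred)
    h = list(psf) + [0.0] * (n - len(psf))  # zero-pad PSF to signal length
    H, G = dft(h), dft(blurred)
    F = [g * hk.conjugate() / (abs(hk) ** 2 + k) for g, hk in zip(G, H)]
    return [v.real for v in dft(F, inverse=True)]

# Illustrative data: a scan line smeared over three samples.
signal = [0, 0, 0, 1, 1, 1, 1, 0, 0, 0, 2, 0, 0, 0, 0, 0]
psf = [1 / 3, 1 / 3, 1 / 3]
blurred = circular_blur(signal, psf)
restored = wiener_deconvolve(blurred, psf)
```

With noisy data a larger `k` trades sharpness for noise suppression, which is the trade-off that regularized and wavelet-based schemes aim to handle more gracefully.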

Method and system for spoofing detection and apparatus of video image

I.N. Efimov, e-mail: Mr.Efimov.IN@gmail.com

A.M. Kosolapov, e-mail: kam6377@mail.ru

N.A. Efimov, e-mail: efimov.nikolai@mail.ru

Samara State Technical University (SSTU), Russia, Samara

Keywords: authentication of a recognized object, brightness dispersion, attack model, biometric person identification system.

Abstract

The paper describes an original method for authenticating a recognized object, based on evaluating the repeated dispersion of brightness of conjugate pixels of the object image, and discusses its advantages and disadvantages. A model of attacks on a person's biometric identification system is presented, together with the actions an attacker may take to deceive such systems by spoofing.

The proposed solution can be applied in smaller IT environments (for example, in distance education) to detect spoofing attacks. It can be integrated into existing control systems without specialized equipment; the only requirement is a lighting device, either fluorescent or infrared.

References

1. Born M. Principles of Optics / M. Born, E. Wolf // Science. - 1973.- p. 720.

2. Efimov I.N., Kosolapov A.M. Classification of methods of authentication recognizable object // Collection of materials of international scientific-technical conference "Perspective Information Technologies". - Samara. - 2016. - pp.109-112.

3. Ivanov V.P., Batrakov A.S., Polishchuk G.M. Three-dimensional computer graphics // Radio and communication. - 1995.- p.224.

4. Kostylev N.M., Gorevoy A.V. Module detection vitality facial skin reflectance spectral characteristics of human // Journal of Engineering Science and Innovation. - 2013. - No. 9 - p.13.

5. Kostylev N.M., Trushkin F.A., Kolyuchkin V.Y. Detection of human vitality of the skin on the spectral characteristics of the person // Vestnik MSTU. N. Bauman. "Instrument" series. - 2012. - No. 2 - pp. 75-85.

6. Pustynsky J.H., Zaitseva E.V. The calculation of the image luminance signal and the number of electrons in a television transmitter on the CCD // Reports TUSUR. - 2009. - No. 2 - pp. 5-10.

7. Ruczaj A.N. Model attacks and protection of biometric recognition systems, speaker // Reports TUSUR. - 2011. - No. 1 - pp. 96-100.

8. Efimov I.N., Kosolapov A.M. The method and the bump image recognizer face: Pat. RU 2518939 the applicant and the patentee VPO "Samara State University of Railway Transport". - No. 2013109943; appl. 05.03.2013; publ. 04.11.2014.

9. Chingovska I., Anjos A., Marcel S. On the Effectiveness of Local Binary Patterns in Face Anti-spoofing // Biometrics Special Interest Group, 2012 BIOSIG - Proceedings of the International Conference. - 2012.

10. Chingovska I., Anjos A., Marcel S. Anti-spoofing in action: Joint operation with a verification system // Computer Vision and Pattern Recognition Workshops (CVPRW). - 2013. - pp. 98-104.

11. Duc N.M. Your face is not your password // Black Hat Conference. - 2009. - pp. 1-16.

12. de Freitas Pereira T., Anjos A., De Martino J.M., Marcel S. LBP-TOP Based Countermeasure against Face Spoofing Attacks // International Workshop on Computer Vision With Local Binary Pattern Variants - ACCV. - 2013. - pp. 121-132.

13. Housam, K.B. Face spoofing detection based on improved local graph structure // IEEE Computer Society. - 2014.

14. Maatta J., Hadid A., Pietikainen M. Face spoofing detection from single images using micro-texture analysis // Biometrics (IJCB), International Joint Conference on Biometrics Compendium, IEEE. - 2011.

15. Plataniotis K.N., Venetsanopoulos A. N. Color image processing and applications / K. N. Plataniotis, A. N. Venetsanopoulos - Engineering Monograph: Springer Science & Business Media. - 2000 – p.65.

16. Ratha N.K., Connell J. H., Bolle R. M. Enhancing security and privacy in biometrics-based authentication systems // IBM Syst. J. - 2001. - Vol. 40 - No. 3 - pp. 614-634.

17. Yan J., Liu S., Lei Z. A face antispoofing database with diverse attacks / J. Yan, S. Liu, Z. Lei // Biometrics Compendium, IEEE. - 2012. - pp. 26-31.

Design of projection data smoothing filters for two-dimensional tomography

A. V. Likhachov, e-mail: ipm1@iae.nsk.su

Institute of Automation and Electrometry of the Siberian Branch of RAS (IAE SB RAS) Russia, Novosibirsk

Keywords: two-dimensional tomography, correlation function of noise, projection data smoothing.

Abstract

The paper is devoted to the topical problem of suppressing noise in the projection data used for tomographic reconstruction. The two-dimensional statement is considered in the ray approximation for the parallel scanning scheme. In this case the function of interest is calculated from the inversion formula of the Radon transform. In its algorithmic implementation, the spectrum of each projection is multiplied by the modulus of the frequency (the so-called ramp-filtering procedure), which amplifies the high-frequency component of the noise. According to the Wiener-Khinchin theorem, the power spectral density of a stationary random process is the Fourier transform of its correlation function. Thus the correlation function of the noise affects the result of the reconstruction. The author considered this issue earlier in [5], where it was found that, among the considered data distortions with the same variance, the smallest reconstruction error occurs for Gauss noise. Therefore, it is proposed to process the one-dimensional projections with a filter whose output random component is close to Gauss noise.

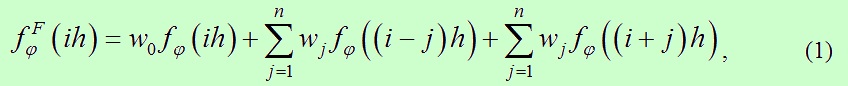

This paper considers the following symmetric digital filter

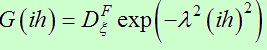

where h is the sampling step of the one-dimensional projections. The values of the coefficients wj are determined from the condition of minimal discrepancy between the correlation function of the noise after filtering (1) and a given function G(ih).

Under the assumption that the projection data contain centered additive white noise, the following method for calculating the coefficients wj has been developed. Equality (1) is sequentially multiplied by

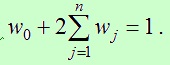

for j = 0, 1, 2, …, n. The mathematical expectation of the left and right sides of each resulting equation is then taken. The values of the correlation function of the filtered noise that appear on the left sides are replaced with the values of the function G(ih). The resulting system of equations is solved by exhaustive search with step dw over several variables, including w0, whose values are taken from the interval [1/(2n+1)+dw; 1-dw]. The other variables are expressed through them by relatively simple relations. Only a solution satisfying the condition wn ≤ wn-1 ≤ ... ≤ w1 < w0 is accepted. The obtained coefficients are multiplied by a normalization constant to ensure the equality

In the present investigation the coefficients wj were calculated up to n=7 inclusive. In this case the exhaustive search was carried out over the values of two to four variables.

Numerical simulations were carried out. The filter length, equal to 2n+1 points, was varied from five to fifteen. The following important result was obtained: the best reconstruction accuracy is achieved when the half-width of the Gauss curve used for the calculation of wj is equal to n grid steps. The computational experiment also shows that the proposed method improves the reconstruction quality compared with averaging or median filtering over the same number of points. In particular, when the standard deviation of the noise is in the range from 5 to 15% of the maximum value of the projection data, the error is 14 to 15 times smaller than that given by averaging (both filters are nine points long).
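The link between the filter coefficients and the correlation function of the filtered noise can be illustrated with a short Python sketch. It is not the author's procedure: it does not perform the search over wj, and the function names, the example weights, and the edge handling (replicating the border samples) are assumptions made for illustration. It relies only on the standard fact that white noise of variance sigma2 passed through a filter with kernel c has the correlation sigma2 * sum_m c[m] * c[m+k] at lag k.

```python
def full_kernel(w):
    """Expand half-weights [w0, w1, ..., wn] into the symmetric kernel of length 2n+1."""
    return w[:0:-1] + w


def apply_symmetric_filter(samples, w):
    """Smooth a 1-D projection with the symmetric kernel built from w.

    Border samples are replicated beyond the ends (an assumption of this sketch).
    """
    n = len(w) - 1
    kernel = full_kernel(w)
    last = len(samples) - 1
    out = []
    for i in range(len(samples)):
        acc = 0.0
        for j in range(-n, n + 1):
            idx = min(max(i + j, 0), last)  # clamp the index at the borders
            acc += kernel[j + n] * samples[idx]
        out.append(acc)
    return out


def filtered_noise_correlation(w, sigma2=1.0):
    """Correlation of filtered white noise at lags 0..2n (in units of the step h)."""
    c = full_kernel(w)
    return [sigma2 * sum(c[m] * c[m + k] for m in range(len(c) - k))
            for k in range(len(c))]


if __name__ == "__main__":
    weights = [0.5, 0.25]  # hypothetical w0, w1 for a three-point filter
    print(apply_symmetric_filter([0.0, 0.0, 1.0, 0.0, 0.0], weights))
    print(filtered_noise_correlation(weights))
```

In a search of the kind described above, a candidate set of weights would be scored by how closely the values returned by `filtered_noise_correlation` match the target values G(ih).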

References

1. Herman G. T. Image reconstruction from projections: The fundamentals of computerized tomography. New-York: Academic Press, 1980. 316 pp.

2. Natterer F. The mathematics of computerized tomography. Stuttgart: John Wiley & Sons, 1986. 222 pp.

3. A. N. Tikhonov, V. Ya. Arsenin, A. A. Timonov. Mathematical problems of computerized tomography. M: Nauka, 1987. 158 pp.

4. S. M. Rytov. Introduction to statistical radio physics. Pt. 1. Random processes. M: Nauka, 1976. 484 pp.

5. A. V. Likhachov, Yu. A. Shibaeva. Dependence of tomography reconstruction accuracy on noise correlation function involved in projection data // Digital signal processing. 2015. No. 2. pp. 18-34.

6. Ramachandran G. N., Lakshminarayanan A. V. Three-dimensional reconstruction from radiographs and electron micrographs: application of convolutions instead of Fourier transforms // Proc. Nat. Acad. Sci. U.S. 1971. V. 68. P. 2236-2240.

7. Shepp L. A., Logan B. F. The Fourier reconstruction of a head section // IEEE Trans. Nucl. Sci. 1974. V. 21, No. 3. P. 21-43.

8. V. A. Erokhin, V. S. Shnayderov. Three-Dimensional reconstruction (computerized tomography). Numerical simulations. Preprint No. 23. Leningrad: Izd-vo LNIVC, 1981. 35 pp.

9. A. V. Likhachov. Investigation of filtration in tomography algorithms // Avtometriya. vol. 43, No. 3. pp. 57-64.

10. A. V. Likhachov. Double-filtration algorithm for two-dimensional tomography // Mathematical modeling. 2009. vol. 21, No. 8. pp. 21-29.

11. V. A. Vasilenko. Spline functions: theory, algorithms, programs. Novosibirsk: Nauka, 1983. 214 pp.

Adaptive image contrasting algorithm

S.S. Zavalishin, e-mail: ss.zavalishin@gmail.com

post-graduate student, RSREU, Ryazan

Keywords: image processing, document processing, contrast enhancement.

Abstract

In this paper we propose an adaptive contrasting algorithm that exploits image structure. In contrast to existing methods, the proposed one applies preliminary segmentation in order to determine contrast curves for each image area independently, which makes it possible to take underexposed and overexposed areas into account. Smooth contrast transitions between neighboring regions are provided by a special graph, which stores the algorithm parameters and adjusts them.

References

1. Margulis D. Photoshop for professionals: Management in color of correct. 2007.

2. Horn B. Robot vision // MIT Press. 1986.

3. Sobol R. Improving the Retinex algorithm for rendering wide dynamic range photographs // Journal of Electronic Imaging, Vol. 13, No. 1.

4. Tao L., Asari V. Modified Luminance based MSR for Fast and Efficient Image enhancement // Proc. of IEEE Applied Imagery Pattern Recognition Workshop. 2003.

5. Brajovic V. Brightness Perception, Dynamic Range and Noise: a Unified Model for Adaptive Image Sensors // Proc. of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. 2004.

6. Safonov I.V. Automatic correction of exposure problems in photo printer // Proc. of IEEE ISCE’06. 2006.

7. Moroney N. Local color correction using non-linear masking // Proc. of 8th Color Imaging Conference. 2000.

8. Chesnokov V. Image enhancement method and apparatus therefor // Patent WO 02/089060. 2002.

9. Ramirez A., Rivera B. O. Content-Aware Dark Image Enhancement Through Channel Division // IEEE Transactions on Image Processing. No. 21(9), pp. 3967-3980. 2012.

10. Zuiderveld K. Contrast Limited Adaptive Histogram Equalization // Graphics Gems IV, pp. 474–485. 1994.

11. Kurilin I. et al. Fast algorithm for visibility enhancement of the images with low local contrast // IS&T/SPIE Electronic Imaging. – International Society for Optics and Photonics, 2015. – P. 93950B-93950B-9.

12. Otsu N. A threshold selection method from gray-level histograms // Automatica, No. 11(285-296). 1975. pp. 23-27.

13. Felzenszwalb P. F., Huttenlocher D. P. Efficient graph-based image segmentation // International Journal of Computer Vision. – 2004. – Vol. 59. – No. 2. – pp. 167-181.

14. Uijlings J. R. R. et al. Selective search for object recognition // International journal of computer vision. – 2013. – Vol. 104. – No. 2. – pp. 154-171.

15. Michelson, A.A. Studies in Optics // University of Chicago. 1927.

16. Wang Z. et al. Image quality assessment: from error visibility to structural similarity // Image Processing, IEEE Transactions on. – 2004. – Vol. 13. – No. 4. – pp. 600-612.

17. Funt B., Ciurea F., McCann J. Retinex in MATLAB™ // Journal of electronic imaging. – 2004. – Vol. 13. – No. 1. – pp. 48-57.

Increasing the speed of the fractal coding algorithm for halftone images

Zykov A. N., e-mail: alexzikov@gmail.com

Keywords: image compression, fractal coding, image analysis, local binary patterns.

Abstract

This work describes the use of Local Binary Patterns (LBP) in the task of fractal coding of grayscale images. Local binary patterns offer an effective way to analyze an image and are an effective characteristic of its texture. The work aims to provide new ideas for reducing the time of fractal coding, in particular by classifying image areas with the local binary pattern operator.
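As an illustration of the operator mentioned above, the following Python sketch computes the basic 3x3 local binary pattern code of a pixel and a 256-bin LBP histogram of a block. It shows the common textbook form of the operator only; the neighbour ordering, the `>=` comparison, and the idea of using the histogram as a block descriptor are assumptions and are not taken from the paper.

```python
# Neighbour offsets (row, column), clockwise starting from the top-left pixel.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(img, r, c):
    """Return the 8-bit LBP code of the interior pixel img[r][c].

    A bit is set when the corresponding neighbour is not darker than the centre.
    """
    center = img[r][c]
    code = 0
    for bit, (dr, dc) in enumerate(OFFSETS):
        if img[r + dr][c + dc] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(block):
    """Count LBP codes over the interior pixels of a rectangular block."""
    hist = [0] * 256
    for r in range(1, len(block) - 1):
        for c in range(1, len(block[0]) - 1):
            hist[lbp_code(block, r, c)] += 1
    return hist


if __name__ == "__main__":
    patch = [
        [9, 9, 9],
        [1, 5, 1],
        [1, 1, 1],
    ]
    print(lbp_code(patch, 1, 1))
```

One hypothetical way to use such a descriptor in fractal coding is to compare a range block only with domain blocks whose descriptors fall into the same class, which shortens the search.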

References

1. Barnsley, M., and Hurd L., Fractal Image Compression // Wellesley, MA: A.K.Peters, Ltd., 1993.

2. Jacquin A.E. Fractal image coding: A review // Proceedings of the IEEE, 1993. Vol. 81(10). P. 1451-1465.

3. Welstead S., Fractal and Wavelet Image Compression Techniques // Tutorial Texts in Optical Engineering, SPIE Publications, 1999. Vol. 40.

4. Ojala, T., Pietikainen, M., Maenpaa, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns // IEEE Transactions on Pattern Analysis and Machine Intelligence, 2002, Vol. 24, No. 7. P. 971–987.

5. Ahonen, T., Hadid, A., and Pietikainen, M. Face recognition with local binary patterns // Proc. European Conf. Computer Vision, 2004, P. 469–481.

6. Takala, V., Ahonen, T., Pietikainen, M. Block-based methods for image retrieval using local binary patterns // Scandinavian Conference on Image Analysis, Joensuu, Finland, 2005, P. 882–891.

7. https://sipi.usc.edu/database/database.php?volume=misc.

8. https://ru.wikipedia.org/wiki/PSNR.

9. https://ru.wikipedia.org/wiki/SSIM.

Segmentation algorithms optimization with parallel computing techniques for image analysis systems

V.N. Stepanov, e-mail: vnstepanov@yandex.ru

V.A. Trapeznikov Institute of Control Sciences of Russian Academy of Sciences, Russia, Moscow

Keywords: parallel computing, image processing, segmentation, GPGPU.

Abstract

The paper presents an analysis of general-purpose computing on graphics processing units (GPGPU) as applied to image processing (segmentation) for analyzers of medical and biological micro-objects. The adaptation of the processing algorithms for parallelization and the resulting increase in execution speed are shown.

The presented studies show that image processing is a well-parallelizable task.

The use of parallel algorithms based on GPGPU technology accelerated image segmentation by up to 25 times.

Using fast, reduced-accuracy versions of functions does not affect the segmentation result: a per-pixel comparison of the segmented images showed that the result does not differ from that obtained with full accuracy. The average speedup in this case is 15.9%.

The calculation speed can be increased by 19% by applying an optimized color conversion algorithm.

Thus, parallel processing on the equipment used in this work (an NVIDIA GeForce 970 GPU) allows real images (usually no larger than 1 MPix) to be segmented at a rate close to real time, with a latency of 170 ms. This delay makes it possible to eliminate the preview window previously used when setting the segmentation thresholds and to output the result directly on the image.

References

1. Aaftab Munshi; Benedict R. Gaster; Timothy G. Mattson; James Fung; Dan Ginsburg. OpenCL Programming Guide. – Addison-Wesley Professional, 2011.

2. Aaftab Munshi. The OpenCL Specification 1.0.48. Khronos Group. 2009.

3. Popova G.M., Stepanov V.N., Druzhinin J.O., Diatchina I.F. Multifunctional information-computer complex for image analysis and diagnostics // Information technology and computer systems. 2010. No. 4. P. 25-37.

4. Dimashovan M.P. GPU Implementation of Mean Shift image segmentation algorithm // Proceedings of X All-Russian Conference "High-performance parallel computing on cluster systems" 2010. V.1 – P. 214

5. Drindik R.V., Privalov M.V. Parallelization of evolutionary methods of three-dimensional medical image segmentation // Information control systems and computer monitoring. – Donetsk: DonNTU, 2012. - P. 480 - 484.

6. Popova G.M., Stepanov V.N., Druzhinin J.O. Interactive processing method for color images of the biological objects to get their semantic description // System analysis and management in biomedical systems. 2009. V. 8, No. 3. P. 741-746.

7. Popova G.M., Druzhinin J.O., Stepanov V.N., Diatchina I.F., Chazova N.L., Berschanskaia A.M., Melnikova N.V. Quantitative diagnosis of cancer of the prostate using a computer analyzer «Morpholog-Net» // System analysis and management in biomedical systems. 2006. V. 5, No. 4. P. 943-954.

Filtration of digital images based on autoencoder

Ipatov A.A., e-mail: artoymipatov@gmail.com

Volokhov V.A., e-mail: volokhov@piclab.ru

Priorov A.L., e-mail: andcat@yandex.ru

Apalkov I.V., e-mail: ilya@apalkoff.ru

Keywords: image filtering, machine learning, feedforward neural network, autoencoder.

Abstract

This paper presents the implementation and study of a noise reduction algorithm based on an autoencoder. An autoencoder is a kind of feedforward neural network that is trained by an unsupervised learning algorithm. A standard dataset was used to test the proposed filtering algorithm. Additive white Gaussian noise is considered as the noise model. The paper presents numerical and visual results showing the main features of the considered algorithm.
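The idea of training an autoencoder to remove additive white Gaussian noise can be sketched in a few lines of Python. This is a toy illustration, not the authors' network: the architecture (one sigmoid hidden layer and a linear output), the squared-error loss, plain stochastic gradient descent, the synthetic one-dimensional step-edge patches, and all names and parameter values are assumptions.

```python
import math
import random


def make_autoencoder(n_in, n_hidden, rng):
    """Create small random encoder (w1, b1) and decoder (w2, b2) parameters."""
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_in)]
    return {"w1": w1, "b1": [0.0] * n_hidden, "w2": w2, "b2": [0.0] * n_in}


def forward(net, x):
    """Return the hidden activations and the reconstructed patch."""
    h = [1.0 / (1.0 + math.exp(-(sum(w * xi for w, xi in zip(row, x)) + b)))
         for row, b in zip(net["w1"], net["b1"])]
    y = [sum(w * hi for w, hi in zip(row, h)) + b
         for row, b in zip(net["w2"], net["b2"])]
    return h, y


def train_step(net, noisy, clean, lr):
    """One SGD step on the loss 0.5 * ||output(noisy) - clean||^2; returns the loss."""
    h, y = forward(net, noisy)
    err = [yi - ci for yi, ci in zip(y, clean)]
    # Gradient with respect to the hidden activations, taken before w2 is updated.
    grad_h = [sum(err[i] * net["w2"][i][j] for i in range(len(err)))
              for j in range(len(h))]
    for i, e in enumerate(err):  # decoder update
        for j, hj in enumerate(h):
            net["w2"][i][j] -= lr * e * hj
        net["b2"][i] -= lr * e
    for j, (g, hj) in enumerate(zip(grad_h, h)):  # encoder update
        delta = g * hj * (1.0 - hj)  # derivative of the sigmoid
        for k, xk in enumerate(noisy):
            net["w1"][j][k] -= lr * delta * xk
        net["b1"][j] -= lr * delta
    return 0.5 * sum(e * e for e in err)


def demo(seed=0, n_in=8, n_hidden=4, sigma=0.1, epochs=100, lr=0.05):
    """Train on synthetic step-edge patches corrupted by white Gaussian noise."""
    rng = random.Random(seed)
    net = make_autoencoder(n_in, n_hidden, rng)
    clean_set = []
    for _ in range(50):
        a, b, edge = rng.random(), rng.random(), rng.randrange(n_in + 1)
        clean_set.append([a if i < edge else b for i in range(n_in)])
    losses = []
    for _ in range(epochs):
        total = 0.0
        for clean in clean_set:
            noisy = [c + rng.gauss(0.0, sigma) for c in clean]
            total += train_step(net, noisy, clean, lr)
        losses.append(total / len(clean_set))
    return net, losses


if __name__ == "__main__":
    _, history = demo()
    print(history[0], history[-1])
```

The network sees only noisy patches at its input and is penalised against the clean ones, so the hidden layer is pushed to keep the patch structure and discard the noise. For images, the same scheme would be applied to two-dimensional patches flattened into vectors.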

References

1. Katkovnik V., Foi A., Egiazarian K., Astola J. From local kernel to nonlocal multiple-model image denoising // Int. J. Computer Vision. 2010. V. 86, No. 8. P. 1–32.

2. Marsland S. Machine learning: an algorithmic perspective. Chapman and Hall, 2009.

3. Vincent P. Stacked denoising autoencoders: learning useful representations in a deep network with a local denoising criterion // J. Machine Learning Research. 2010. V. 11. P. 3371–3408.

4. Muresan D.D., Parks T.W. Adaptive principal components and image denoising // Proc. IEEE Int. Conf. Image Processing. 2003. V. 1. P. 101–104.

5. Priorov A., Tumanov K., Volokhov V. Efficient denoising algorithms for intelligent recognition systems // In: Favorskaya M., Jain L.C. (eds.) Computer Vision in Control Systems-2, Intelligent Systems Reference Library, Springer International Publishing. 2015. V. 75. P. 251–276.

6. The UGR-DECSAI-CVG image database, http://decsai.ugr.es/cvg/dbimagenes.

7. Salomon D. Compression of data, image and sound. – M.: Tekhnosfera, 2004.

8. Wang Z., Bovik A.C., Sheikh H.R., Simoncelli E.P. Image quality assessment: from error visibility to structural similarity // IEEE Trans. Image Processing. 2004. V. 13, No. 4. P. 600–612.

9. Starck J.-L., Candes E.J., Donoho D.L. The curvelet transform for image denoising // IEEE Trans. Image Processing. 2002. V. 11, No. 6. P. 670–684.

10. Buades A., Coll B., Morel J.M. Nonlocal image and movie denoising // Int. J. Computer Vision. 2008. V. 76, No. 2. P. 123–139.

11. Mallat S. A wavelet tour of signal processing. Academic Press, 1999.

12. Donoho D.L., Johnstone I.M., Kerkyacharian G., Picard D. Wavelet Shrinkage: Asymptopia? // J. R. Statist. Soc. B. 1995. V. 57, No. 2. P. 301–369.

If you have any questions, please write to info@dspa.ru